The Future of Data Annotation: Trends and Innovation

Data annotation plays a crucial role in training AI and machine learning models and underpins many of today’s intelligent systems. From autonomous vehicles to sentiment analysis, a model is only as good as the data it learns from, so well-annotated data is key. As we look to the future, data annotation continues to evolve with the arrival of more sophisticated tools and techniques, including Labelo, an open-source platform designed to improve the accuracy of data labeling. Let’s explore the trends and innovations in data annotation and how tools like Labelo are paving the way for more efficient and precise annotation.

1. Automated Annotation with AI Assistance

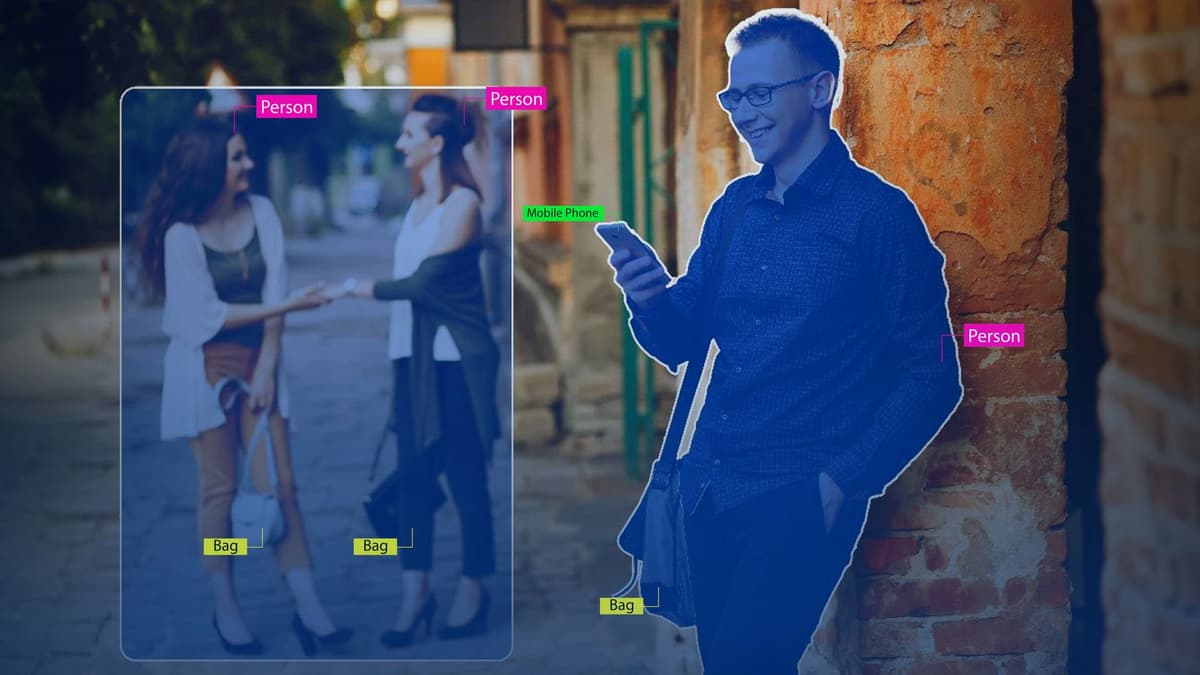

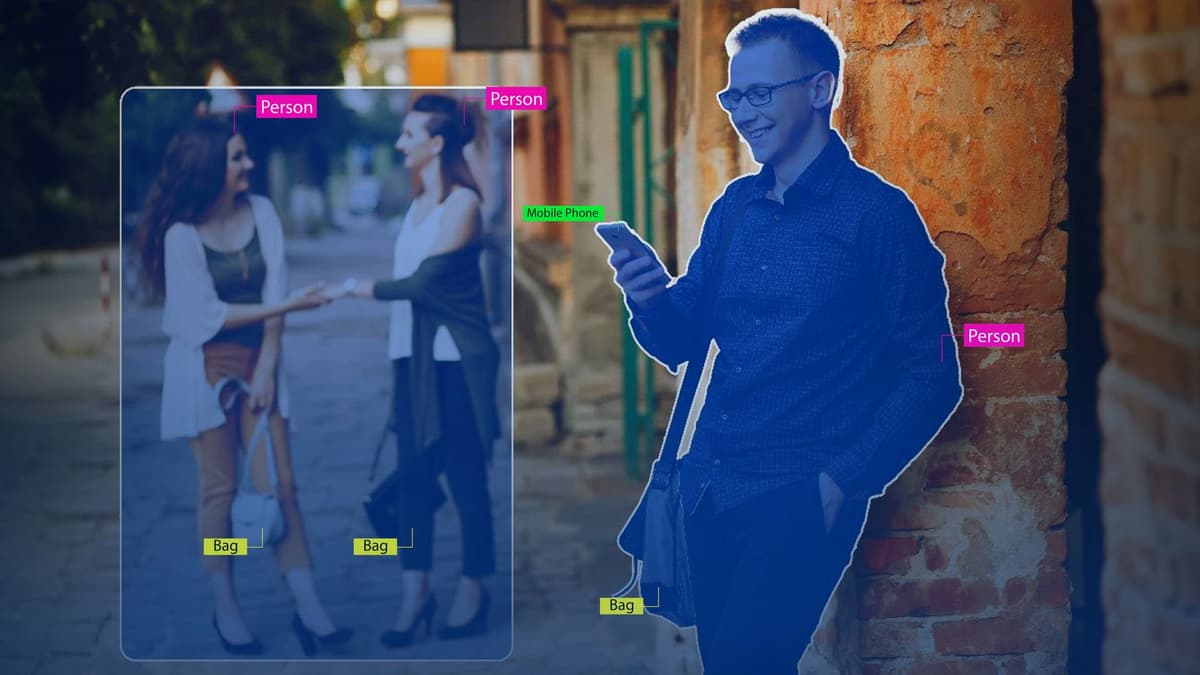

One of the most exciting developments in data annotation is the growing use of AI-powered tools to streamline the process. Annotation has traditionally been time-consuming, but AI can now assist by generating initial labels based on learned patterns, so less human input is required. Platforms such as Labelo use pre-trained models to annotate repetitive data elements, freeing human annotators to focus on refining complex or ambiguous labels.

Labelo is designed to work with AI-assisted annotation, enabling users to train custom models for specific data types. This automation substantially reduces manual effort and shortens project timelines, making large datasets easier to handle. As automation tools improve, “human-in-the-loop” systems, in which AI does the bulk of the annotation and human labelers validate and refine the output, are likely to become more common.
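To make the human-in-the-loop idea concrete, here is a minimal Python sketch of one common routing pattern: model pre-labels at or above a confidence threshold are accepted automatically, and the rest are queued for human review. The `PreLabel` structure, the `route_prelabels` function and the 0.9 threshold are hypothetical illustrations, not part of Labelo’s API.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    """A model-generated label for one item (hypothetical structure)."""
    item_id: str
    label: str
    confidence: float  # model's score for `label`, in [0, 1]


def route_prelabels(prelabels, threshold=0.9):
    """Split pre-labels into auto-accepted ones and ones needing human review.

    Items at or above `threshold` are accepted as-is; everything else is
    sent to human annotators to confirm or correct.
    """
    accepted, needs_review = [], []
    for p in prelabels:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            needs_review.append(p)
    return accepted, needs_review


if __name__ == "__main__":
    batch = [
        PreLabel("img_001", "car", 0.97),
        PreLabel("img_002", "pedestrian", 0.62),
        PreLabel("img_003", "car", 0.91),
    ]
    auto, review = route_prelabels(batch)
    print("auto-accepted:", [p.item_id for p in auto])
    print("human review:", [p.item_id for p in review])
```

Raising the threshold sends more items to humans and lowers the risk of accepting wrong labels; lowering it saves effort at the cost of more unchecked errors.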

2. 3D and Multimodal Annotation for Complex Data

In fields like autonomous vehicles and AR/VR, there’s a growing need for 3D and multimodal annotation. Annotated 3D data, such as LiDAR point clouds, helps models detect real-world objects and track their movement more accurately. Annotation tools like Labelo support multimodal data, allowing users to work with a range of formats and sensor inputs, from image and video to text and audio.

This multimodal support makes Labelo a strong fit for industries that need comprehensive, multi-dimensional labeling. It allows teams to annotate 3D point clouds and synchronize them with visual, textual, or audio data, so their models are better prepared to interpret complex real-world environments.

3. Contextual and Sentiment-Based Annotation for NLP

Natural language processing (NLP) is evolving rapidly, especially in sentiment analysis, conversational AI, and emotion recognition. Annotating such data requires a high degree of contextual understanding. Future NLP annotation will focus on capturing not only words but also their contextual meanings, sentiments, and intentions.

Sentiment-based annotation, for instance, requires the annotator to determine emotional undertones within text or speech, such as sarcasm, humor, or frustration, which may not be easily identifiable by traditional annotation methods. Innovations in annotation platforms are making it easier to incorporate context by allowing annotators to flag sentiment, emotions, and intentions alongside standard linguistic elements.

4. Crowdsourcing for Diverse and Inclusive Data Annotation

One of the biggest challenges in AI today is the lack of diversity in training data, which can result in biased models. To create more inclusive datasets, organizations are increasingly turning to crowdsourcing for data annotation. Crowdsourcing platforms allow annotators from different cultural and linguistic backgrounds to take part in the annotation process, broadening the range of perspectives represented in the data.

Future annotation strategies will continue to emphasize inclusivity, recruiting annotators from underrepresented groups and regions to help reduce bias. Crowdsourcing platforms are also adding quality checks and incentive structures to keep annotations accurate and minimize bias. This approach helps build datasets that better reflect global diversity, ultimately leading to fairer, more accurate AI models.
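One widely used quality check in crowdsourcing is to mix in “gold” items whose correct labels are already known, and to trust only annotators who score well on them. The Python sketch below assumes a simple in-memory layout of responses; the function names, the dictionaries and the 0.8 cutoff are hypothetical illustrations rather than any platform’s actual interface.

```python
def gold_accuracy(responses, gold):
    """Per-annotator accuracy on gold items.

    responses: {annotator_id: {item_id: label}}
    gold: {item_id: correct_label}
    Annotators who answered no gold items are left out of the result.
    """
    scores = {}
    for annotator, answers in responses.items():
        graded = [item for item in answers if item in gold]
        if not graded:
            continue
        correct = sum(answers[item] == gold[item] for item in graded)
        scores[annotator] = correct / len(graded)
    return scores


def trusted_annotators(responses, gold, min_accuracy=0.8):
    """Return the set of annotators whose gold accuracy meets the cutoff."""
    scores = gold_accuracy(responses, gold)
    return {a for a, s in scores.items() if s >= min_accuracy}


if __name__ == "__main__":
    gold = {"q1": "positive", "q2": "negative"}
    responses = {
        "ann_a": {"q1": "positive", "q2": "negative", "t7": "neutral"},
        "ann_b": {"q1": "negative", "q2": "negative", "t7": "positive"},
    }
    print(gold_accuracy(responses, gold))
    print(trusted_annotators(responses, gold))
```

Gold items are usually hidden among regular tasks so annotators cannot tell which answers are being graded.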

5. Innovations in Quality Control and Annotation Validation

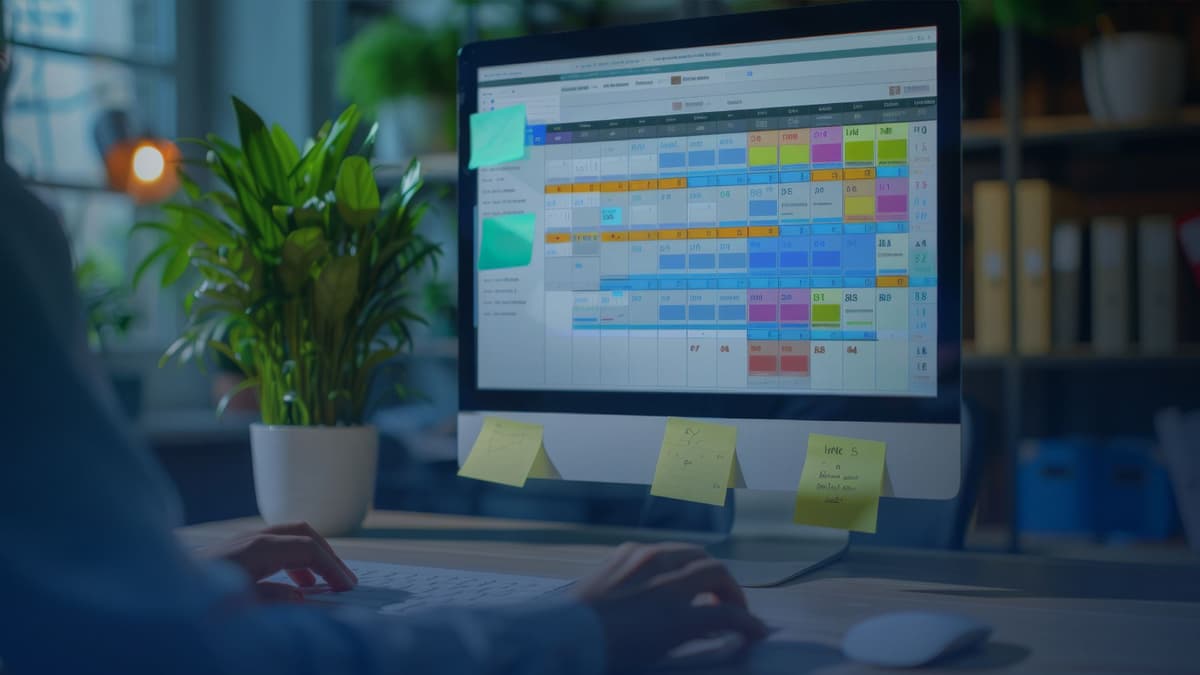

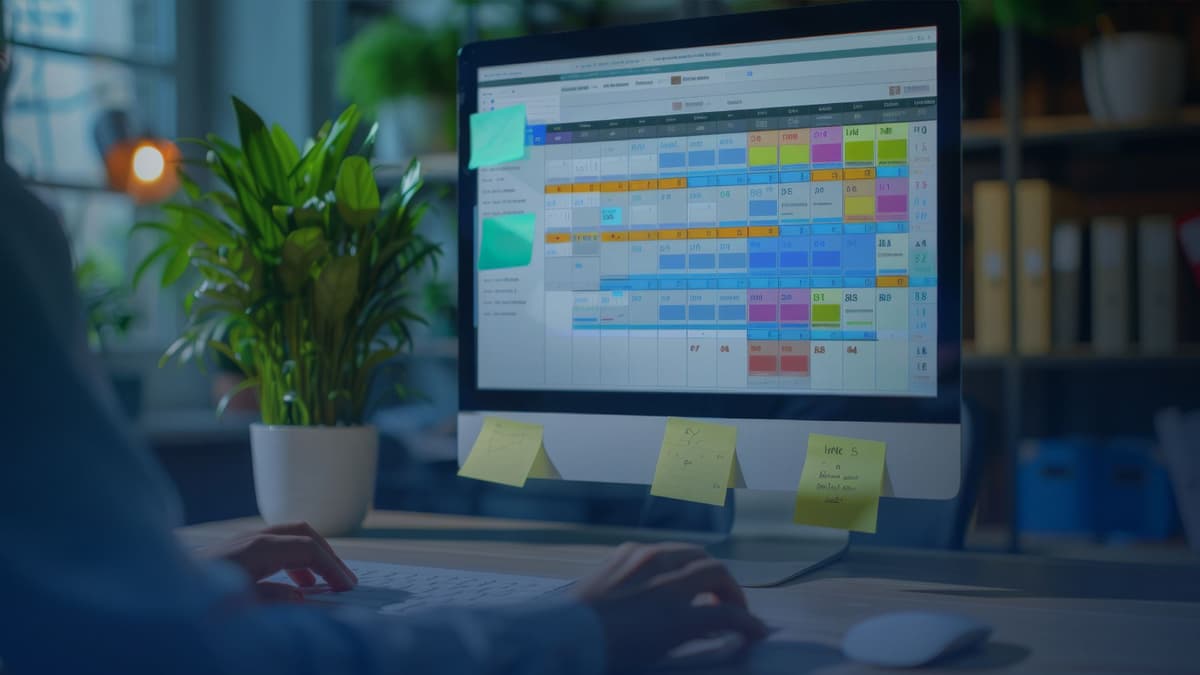

Maintaining annotation quality is essential to building effective AI models. Advances in quality control are helping organizations ensure that their annotated data is both accurate and consistent. These advances include automated quality checks using AI algorithms, cross-validation techniques, and consensus-based methods, in which multiple annotators label the same data point and the final label is decided by their agreement.
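A simple way to implement consensus is a majority vote with an agreement score. The Python sketch below is one illustrative version: `consensus_label` and the 0.6 agreement cutoff are hypothetical choices, and items without a clear majority are returned as `None` so they can be escalated to an expert reviewer.

```python
from collections import Counter


def consensus_label(labels, min_agreement=0.6):
    """Majority-vote consensus for one data point.

    labels: labels given by different annotators for the same item.
    Returns (winning_label, agreement), where agreement is the share of
    annotators who chose the most common label. If agreement is below
    `min_agreement`, winning_label is None so the item can be escalated.
    """
    if not labels:
        raise ValueError("need at least one label")
    label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    if agreement < min_agreement:
        return None, agreement
    return label, agreement


if __name__ == "__main__":
    print(consensus_label(["cat", "cat", "dog"]))   # 2 of 3 annotators agree
    print(consensus_label(["cat", "dog", "bird"]))  # no clear majority
```

With a cutoff above 0.5, a two-way tie never counts as consensus, which keeps ambiguous items out of the training set until someone resolves them.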

Some annotation platforms now implement continuous quality monitoring, in which AI systems analyze annotation patterns and detect inconsistencies in real time. Potential errors are flagged before they enter training datasets, which reduces costly rework and helps ensure that only high-quality annotations are used to train the final model.

6. Ethics and Data Privacy in Annotation

With data privacy concerns growing, ethical data annotation is more important than ever. Regulations like the GDPR in Europe and the CCPA in California impose strict controls on personal data, which affects how data is handled during annotation, especially sensitive information such as medical or other personal records.

The future of data annotation will prioritize ethical practices, ensuring that sensitive data is anonymized and private information is protected. Annotation platforms are increasingly incorporating secure environments and compliance mechanisms so that annotators can work within regulatory frameworks. Ethics boards and transparent annotator guidelines are also likely to become standard practice to safeguard individuals’ rights and data privacy.
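Anonymization often starts with redacting obvious identifiers before data reaches annotators. The Python sketch below shows simple rule-based redaction with regular expressions. The `mask_pii` function and its two patterns are hypothetical illustrations: they miss many kinds of personal data (names, street addresses, ID numbers) and may also mask other long digit runs such as dates, so on their own they are not sufficient for GDPR or CCPA compliance.

```python
import re

# Illustrative patterns only: they catch common email and phone formats,
# not every kind of personal data.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text):
    """Replace email addresses and phone-like numbers with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits are gone
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    note = "Patient reachable at jane.doe@example.com or +1 (555) 123-4567."
    print(mask_pii(note))
```

Placeholder tags such as `[EMAIL]` keep the sentence structure intact, so annotators can still label intent or sentiment without seeing the underlying identifiers.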

7. Real-Time Annotation and Self-Learning Systems

As more applications demand real-time processing, the concept of real-time annotation has emerged. In this approach, data is annotated on the fly, providing instant feedback that AI models can use immediately. This real-time annotation is especially useful in areas like cybersecurity, where timely identification of threats is critical, and in customer support, where understanding user sentiment as it happens can improve interactions.

Self-learning systems are also gaining popularity. In these systems, annotated data is fed back into the model to help it improve its own annotation capabilities. This feedback loop lets AI models continuously refine their understanding, making future annotations more accurate and less reliant on human intervention.

Conclusion

The future of data annotation is being shaped by technological innovations and an ever-growing demand for annotated data in various fields. Automation, multimodal annotation, crowdsourcing, real-time processing, and ethical practices are just a few of the trends redefining this space. As we advance, the role of human annotators will shift from labor-intensive tasks to oversight and quality control, while AI-assisted tools will take on the heavy lifting.

Organizations that stay abreast of these trends and invest in cutting-edge annotation solutions will be well positioned to build smarter, fairer, and more robust AI models. Innovations in data annotation promise not only to improve the efficiency and quality of AI systems but also to create more inclusive and ethically sound technologies that benefit society at large. In today’s AI-driven world, high-quality labeled data is the backbone of accurate machine learning models, and Labelo’s versatility, speed, and precision make it well suited to annotating diverse data types.

Labelo Editorial Team

May 26, 2025
